What Are We?

As the digital and real merge, the question is who or what we are interacting with.

In ‘Beyond the Sea’, an episode from the latest season of Black Mirror, Aaron Paul’s character gives us a glimpse of something much closer to reality than the episode itself might suggest. People these days aren’t what they seem. Or to put it another way: it is becoming increasingly difficult to tell the real from the digital, and the humans from the bots.

We spend hours in virtual spaces, whether that’s work calls on Zoom, social media, video calls or VR events. And our interaction with customer service bots is also increasing, with ‘helpful tech’ seamlessly integrated into our everyday lives, for better or worse.

The line between real and virtual is blurring. Our lives exist in multiple places at once. And who or what we are interacting with is increasingly hard to tell. It is a unique feature of this moment in time.

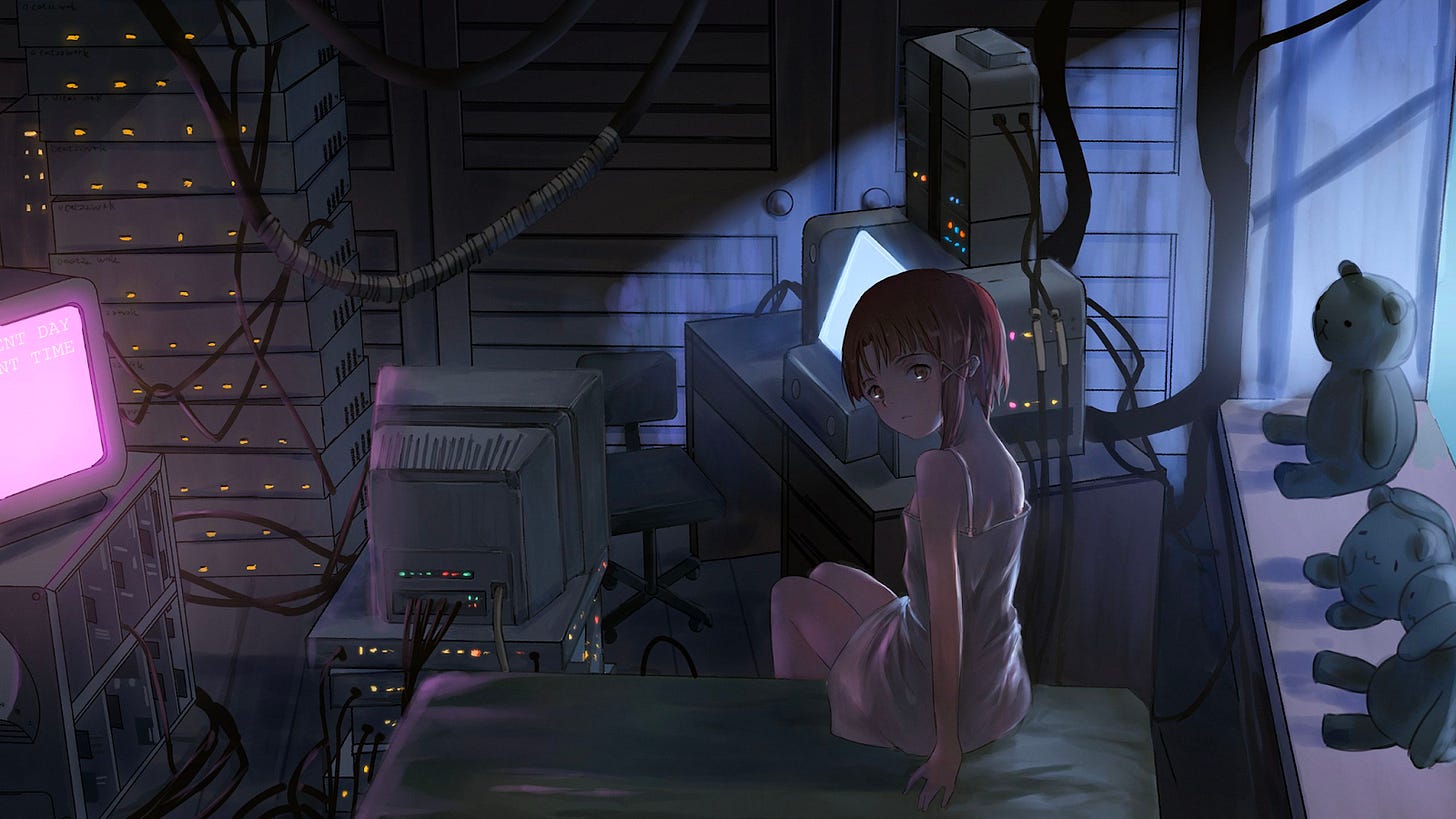

We absorb an extraordinary amount of information, and all of that information is also absorbed by the machine. The closest anyone came to foreseeing our obsession with social media was the anime series Serial Experiments Lain, which follows a fourteen-year-old girl who becomes consumed by ‘The Wired’, a virtual, globally connected space. It is one example of popular culture predicting and shaping advancements like Artificial Intelligence.

Uniquely at this moment in time, AI is starting to become indistinguishable from humans. Think of the person who won an art competition using AI image generator Midjourney, or the woman whose photograph was disqualified from a competition because judges suspected it was AI-made, even though it wasn’t. South Park co-creator Trey Parker co-wrote the episode ‘Deep Learning’ using (and crediting) ChatGPT, while Hollywood goes on strike, in part, due to AI creep into the creative process. Meanwhile, Canadian musician Grimes is offering to split royalties 50/50 on any successful AI-generated song that uses her voice, whereas Irish musician Hozier has threatened to join the picket lines over the potential threat of AI to the music industry.

This is AI’s moment and things are getting all mixed up. But it’s not new. ELIZA, initially developed in the 1960s to simulate human conversation, has had a revival as a therapybot in the past decade, spawning an indie game of the same name where you train AI therapists by talking to them. At a time when Boston Dynamics was making agile robot ‘dogs’, Hollywood was starting to revive popular science fiction narratives exploring how humans would handle android sentience. These narratives examine the concept of avatars that are fully autonomous, driven by AI without human supervision, living among ordinary humans.

These ‘avatars’ offer advanced interactions, can perform complex tasks and communicate with ‘the cloud’, and raise concerns about safety, ethics, and accountability. But although these humanoid AI beings may seem hypothetical, they aren’t that far away.

“Your Next Operating System Will Look Like You, Make You Laugh and Remember,” said the team at Silicon Valley-based startup Fable. Assistants like Amazon Alexa that come with an embodied avatar already exist, in the form of Magic Leap’s Mica, to name just one.

In 2013 BabyX was born: an endeavour by Dr. Mark Sagar at the University of Auckland that birthed a virtual infant capable of learning and interacting just like a human baby. From this pioneering work, Soul Machines emerged, providing lifelike virtual assistants and customer service agents rooted in BabyX’s technology. If you’ve engaged with a website pop-up chatbot, you’ve likely talked to a protégé of BabyX.

Combine BabyX and Boston Dynamics and you end up with Ai-Da, the “world’s first ultra-realistic humanoid robot artist”, or Grace, the “COVID-19 nursing assistant robot”, or Ami Yamato, the “world’s first virtual influencer”. Discussion of the trend for these service robots to be predominantly female by design is for another time, but what Ai-Da, Grace, Ami and their counterparts show is that we are perhaps not as far from walking, talking artificial intelligence as we may think. And, if that’s the case, how would you treat AI in human form?

But as telephone bots turn into deepfake videobots like Synthesia, the lines between real and fake blur. Obviously, no one, human or otherwise, should have to deal with abuse. Right? Just because something isn’t ‘real’ doesn’t mean humans can’t or won’t have compassion for it. Saying “thank you” to Alexa is a knee-jerk reaction many people have. And if it doesn’t come naturally, in 2018 Amazon introduced a ‘Magic Word’ feature to help train you (or your kids) to ask nicely.

Alexa and Siri are forms of AI, controlled by humans for the benefit of humans. Sure, sometimes Alexa will turn the lights off for no reason, or Siri will out of the blue stop understanding your accent, but for the most part, these tools help make our lives that little bit easier. We’re more HER than HAL right now. So much so that last year a man fell in love with and married a Replika AI chatbot.

Now, that may be extreme, but is it that unusual to fall in love with an avatar? We Met In Virtual Reality (2022) explores the meet-cutes of real people who fell in love behind an avatar. Engaging with a well-trained, well-written NPC can be fun in VR, but there’s something magical when the avatar you’re engaging with is a real person who can see you in your avatar form.

Much has been said about the VR boom during the pandemic. What has been discussed far less is why it happened, or what impact that virtual engagement was having on people who were isolated from their communities and regular socialisation. Throughout 2020, Tender Claws hosted various live events and performances within ‘The Under Presents’, designed to keep players engaged and entertained while maintaining a sense of community in a virtual space. They hired actors in headsets, quarantined at home, not only to act out The Tempest, but to intermittently explore the game and engage with players. Without the ability to talk, some would take the time to playfully show users around, communicating through hand gestures alone. At a time when the usual human connection is prohibited, a virtual hug from a real person can be a powerful thing.

We’re on a journey with AI which, though captivating, exposes the delicate balance between the potential benefits and challenges of a world in which we interact with both humans and AI, indistinguishable from each other. And it raises the urgent question we should all be asking ourselves: who are we?

How will we know who we are speaking to, at any one time? How can we tell the difference between human and bot, and what are those differences? Because, honestly, everything is getting all mixed up.

Thinking about the different types of interactions we might have in virtual or online spaces brought us to this list. We’re curious if you know of any others.

Avatars representing real people in virtual spaces

These avatars are like digital doubles, allowing us to interact, work, or hang out without actually being there. While these avatars can help break down social barriers, they also bring challenges like privacy concerns and potential miscommunications. Think GTA Online, VRChat, Fortnite, Second Life, you get the idea. So far so good.

AI-controlled avatars supervised by humans to help humans

Think Joaquin Phoenix talking to his phone in HER, talking to an Alexa, or Siri, or Soul Machines as mentioned above. In this setup, AI-generated avatars that look sort of human, and operate within a set of strict constraints, help with customer service, sales, or entertainment, while human operators keep an eye on them to ensure quality. It’s a mix of AI efficiency and human insight, but it might cause confusion about the avatar’s identity and abilities. What’s key here is that you always know you’re talking to a bot. There’s no confusion on that point. Still, there are questions about responsibility and accountability for both AI and human supervisors.

Avatars representing pre-recorded human interactions

Bots that look like people. These avatars use scripted, pre-recorded human interactions. This makes them less adaptable and less responsive. They can provide info or basic help but might be less engaging if users go off-script. Think of any NPC you’ve ever met in a game. Think of The M.C. in The Under. Synthesia. The challenge is to create a genuine, responsive experience despite the avatar’s limitations. These avatars can make influential figures and unique experiences more accessible, help onboard or welcome visitors to an experience, and foster inclusion.

Avatars pretending to be AI but controlled by humans

This is a weird one, but think Westworld, or influencers like Lil Miquela & Bermuda, or previous examples like Grace & Ai-Da. In this case, people are hired to impersonate AI entities, usually for entertainment, to perform specific tasks, or for social engineering. These avatars can bridge language and cultural gaps with real-time translation and cross-cultural interactions. The challenge is keeping up the AI illusion while delivering a satisfying experience. Users might feel deceived if they learn about the human behind the avatar, leading to trust issues and legal concerns. In some cases we’ve seen concerns around cultural appropriation when these avatars are of an ethnic background but are run by people or brands that don’t represent that ethnicity. And it gets more complicated when these avatars are made for financial gain. Someone’s often making money, and we frequently see issues which are rooted in capitalism (oppression, racism, sexism, homophobia) replicated through avatars, AI and within virtual spaces.

AI-controlled bots or avatars supervised by humans

This category combines AI-driven avatars with human oversight, allowing them to operate autonomously within certain boundaries. Human supervisors can step in when needed, ensuring avatars stay within ethical and legal limits. Midjourney is a good example: you put in the prompt, but then it creates an image with its own interpretation, over which you have limited control. Or more simply, ChatGPT. A more interesting example is HAL, from Kubrick’s 2001: A Space Odyssey. This setup balances AI efficiency against minimal human input, which is limiting in some ways, and it might still present challenges in establishing the avatar’s identity and assessing human involvement and responsibility. And as we saw with HAL, or Asimov’s I, Robot, there are many unknowns in terms of how humans and AI interpret things differently. There’s a difference between subjective human logic and absolute robot logic.

Avatars representing unsupervised AI

At the top of the pile are avatars (often representing larger systems) which are fully autonomous, driven by AI without human supervision. They offer advanced interactions and can perform complex tasks but raise concerns about safety, ethics, and accountability. This is the ‘AI out of control’ scenario so often explored in our popular entertainment. The more utopian vision shows hyper-efficient systems that manage the world for human benefit. A more dystopian view is where it goes wrong. Think Skynet, from Terminator 2, embodied by Arnold. Or Agent Smith in The Matrix. Key here is that any human interpretation of an ethical framework is missing, so it’s essential that the AI has full trust and transparency with the users it interacts with, avoiding any situations where it might cause harm or make controversial decisions.

Our takeaway is that as the real and virtual worlds start to merge and we spend more time in virtual spaces, the line between human and AI blurs.

In these murky waters, we must cultivate an ethical evolution. One where we grapple with our own identity, our interactions with AI, and our understanding of the moral fabric of a world interwoven with digital entities.

Navigating this journey, we wrestle with identity, sentience, autonomy, and morality. It is fascinating, and perhaps at times terrifying. Our future with AI echoes past challenges faced by humanity, but have we learned the right lessons?